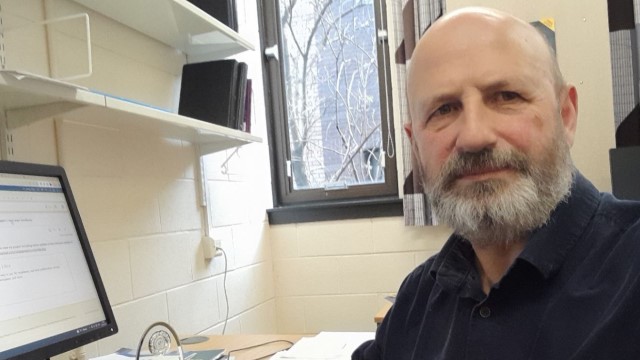

Oleksandr Letychevskyi, Doctor of Physical and Mathematical Sciences and Head of the Department of Digital Automata Theory at the V. M. Glushkov Institute of Cybernetics of the NAS of Ukraine, gave an interview to «RBC-Ukraine» (for the «Styler» project). He spoke about the future of artificial intelligence (AI), whether it can pose a real threat to humanity, what developments are under way in Ukraine, and how the latest AI-based technologies can be used against us in this war.

The scientist noted: “Let’s define what artificial intelligence is. Neural networks and machine learning are only one part of artificial intelligence. As early as the 1960s, Viktor Glushkov defined AI primarily as the ability to derive facts from knowledge, not just as machine learning based on statistical algorithms. ChatGPT, for example, learns from phrases. It does not think; it selects words according to statistics, although it selects them very successfully. There is no semantics in the choice of words. A large amount of training can yield results, but because there is no semantics, errors remain possible. Humans perceive the world deductively. They also learn from examples and empirical observations, but they determine the semantics of knowledge, whereas neural network technology does not. (...) I see the further development of artificial intelligence exclusively in the combination of neural networks and deductive thinking.”

Oleksandr Letychevskyi explained how real the threat of humanity’s destruction by AI is: “Humanity can be destroyed as a civilization if AI actively participates in social networks and influences ordinary users. How far this goes depends on how much we allow it to interfere in human life. If we allow it to launch missiles and let such decisions be made by artificial intelligence, then of course that is a danger. But talk of fantastic robots appearing and destroying everything is still far off. That said, there has already been a case in which a warehouse robot mistook a person for a product and crushed them with its manipulator. Therefore, such systems should not be controlled exclusively by neural networks. If someone allows this in critical areas, it will be a crime. (...) But the greatest danger is the propaganda of the ‘russkiy mir’ that can spread in social networks. ChatGPT can be reprogrammed and retrained, because it is an accessible technology. And this is very dangerous.”

Source: media platform «RBC-Ukraine»